Chapter 2

The Building Blocks of CDR Systems

The previous chapter explained why carbon dioxide removal is necessary to combat global temperature increases, what constitutes negative emissions, and the connection of CDR to the global carbon cycle. It also discussed the potential benefits and costs of negative emissions, especially in the context of social justice. This chapter provides an overview of the types of CDR approaches that have been developed or are being developed today. Together, they comprise a portfolio of approaches, or “building blocks,” for CDR systems.

As introduced in Section 1.2, the concept of “potential CDR” is critical when evaluating specific CDR approaches. While all the approaches discussed in this chapter have the capacity to achieve net-negative emissions, they will not do so under all conditions. Life cycle analysis, discussed in detail in Chapter 4, provides one tool for evaluating whether net negativity can be achieved under different deployment assumptions.

This chapter introduces each of the potential CDR approaches in Figure 2.1. Each approach has distinct dynamics with respect to the stocks and flows of carbon and the resulting form of carbon storage. These characteristics place key constraints on large-scale CDR deployment, suggest the need for a strong portfolio of multiple CDR approaches, and inform policy and governance frameworks. The CDR approaches discussed in this chapter include:

CO2 Mineralization: processes by which certain minerals react and form a bond with CO2, removing it from the atmosphere and resulting in inert carbonate rock.

Ocean Alkalinity Enhancement: increasing the charge balance of ions in the ocean to enhance its natural ability to remove CO2 from the air.

Soil Carbon Sequestration: the use of land or agricultural practices to increase the storage of carbon in soils.

Improved Forest Management: land management practices designed to increase the quantity of carbon stored in forests relative to baseline conditions (e.g., by modifying harvest schedules).

Afforestation and Reforestation: growing new forests in places where they did not exist before (afforestation) or restoring forests in areas where they used to grow (reforestation).

Coastal Blue Carbon: techniques that utilize mangroves, tidal marshes, seagrass meadows, and other coastal habitat to increase carbon-removing biomass and, in particular, soil carbon.

Biomass Storage: the conversion of biomass into derived materials with more durable storage than the biomass source, including using pyrolysis to convert biomass into bio-oil (fast pyrolysis) or biochar (slow pyrolysis).

Biomass Energy with Carbon Capture and Storage (BECCS): a form of energy production that utilizes plant biomass to create electricity, hydrogen, heat, and/or liquid fuel. This process simultaneously captures and sequesters some portion of the carbon from the biomass for storage.

Direct Air Capture (DAC): a process that removes CO2 from ambient air and concentrates it for storage deep underground or use in a wide variety of products.

Geological Storage: the injection of CO2 into a geologic formation deep underground for essentially permanent timescales. This activity is not considered a CDR approach by itself, but rather, is a way of safely storing carbon removed from the atmosphere through DAC and BECCS.

Figure 2.1. CDR approaches considered within the primer (NASEM, 2019)

These approaches vary in their capacity, cost, permanence, and storage dynamics, and thus may play complementary roles. For example, direct air capture with carbon storage (DACCS) provides a flux of CO2 from the atmosphere and, through geological storage, an effectively permanent form of storage (secure carbon storage deep underground over a period of thousands of years or more). Forest growth increases the flux of CO2 from the atmosphere into tree biomass, but that biomass remains prone to decomposition, fire, and other losses on decadal to century timescales. As such, afforestation and reforestation provide a moderate level of permanence, often have a lower cost, and are generally constrained by available land.

In summary, each approach functions as a complete system, is organized into individual component parts, and contributes to a broad portfolio of CDR approaches. These dynamics form the fundamental understanding of each potential CDR system and frame the rest of this section.

This chapter examines each of the CDR approaches described above, their current research, development, and deployment status, and the predicted scale of their potential for removing CO2. It also explores significant considerations for scale-up and for the duration of storage, including land constraints, environmental constraints, energy requirements, supply chain limitations, costs, physical barriers, the permanence (or reversibility) of CO2 storage, monitoring, verification, and governance. Each section then discusses the costs of the option in question, including current and projected costs and financial incentives for deployment. Finally, for each option, this chapter offers a perspective on existing “landmark” projects, or in some cases potential future projects, both to inspire new thinking within the field and to help address some current challenges.

2.1

CO2 mineralization

2.1.1

Introduction

Mineralization of CO2 is a process in which alkaline material reacts with CO2 to form solid carbonate minerals. It can be used for CO2 removal from air, for stable and permanent carbon storage, or for post-processing, in which the alkaline agents are separated and the CO2 is stored elsewhere (Jorat et al., 2015a; Jorat et al., 2015b; McQueen et al., 2020a). Sources of alkalinity (i.e., Mg2+- and Ca2+-rich materials) can be naturally occurring silicate minerals (such as olivine, wollastonite, and serpentine group minerals) or waste material from industrial or mining operations (e.g., steelmaking slags and nickel mine tailings) (Mayes et al., 2018; Renforth, 2019). See Section 2.1.4 to learn about challenges specific to scaling carbon mineralization.

Carbon mineralization occurs naturally in alkaline environments. Atmospheric CO2 reacts with rocks (particularly mafic and ultramafic rocks rich in magnesium and calcium, such as basalts, peridotites, and serpentinites) to precipitate carbonates. The rate of mineralization is fast on a geological timescale and could be enhanced for CDR for climate change mitigation (Kelemen et al., 2011; Kelemen and Matter, 2008). As recently reviewed (Kelemen et al., 2019, 2020; NASEM, 2019b), engineered mineralization of CO2 may be applied to geologic formations rich in alkalinity (e.g., basalt and peridotite) and to alkaline industrial wastes by reaction with CO2 via (a) ex-situ processes (in high-pressure and/or high-temperature reactors), (b) surficial processes (ambient weathering or sparging of CO2-enriched gas or fluid through ground materials), or (c) in-situ processes (sending CO2-rich waters or fluids underground to react with alkaline minerals below the surface). These methods, visually represented in Figure 2.2, may be applied to achieve:

CO2 storage via reaction of solid feedstock with fluids or gases already enriched in CO2 by some other process;

CDR from air (i.e., DAC); or

Combined CDR and storage.

In the latter two cases, CO2 would be sourced from air in ambient weathering, or from CO2-bearing surface waters.

Figure 2.2. Visual representation of in-situ, ex-situ, and surficial processes

Ex-situ opportunities involve extracting and grinding minerals for reaction with CO2. The CO2 source to which mineralization is paired determines whether the process achieves negative emissions. Mineralization can be configured to react with pure CO2, or instead with flue gas as a method of post-combustion capture. This results in reduced CO2 emissions and CO2 storage, but not in negative emissions – i.e., it prevents new CO2 from entering the atmosphere but does not remove CO2 already present.

To achieve negative emissions, alkalinity must be reacted directly with CO2 from ambient air or CO2 separated from the air, for example, by using synthetic sorbents or solvents. One can view some proposed DACCS processes as ex-situ mineralization methods, but conventionally-proposed methods termed ex-situ mineralization involve the extraction of the mineral and reaction off-site and at times with the use of reactors capable of achieving engineered pressure and temperature conditions for enhanced reactivity.

Surficial methods for negative emissions are generally considered in terms of ambient weathering, since sparging of ground or pulverized materials using CO2-enriched fluids or gases might be subject to leakage and CO2 loss. Past studies of surficial methods have commonly focused on existing mine tailings of mafic and ultramafic rocks (mined for commodities such as nickel, platinum group elements (PGEs), and diamonds) (Harrison et al., 2013; Mervine et al., 2018; Power et al., 2013; 2014; Wilson et al., 2009; Wilson et al., 2011), and on alkaline industrial wastes, since these materials are already present at the surface with an appropriate grain size. Experiments have shown that reacting ultramafic mine tailings with a 10 percent CO2 gas mixture and aerating the tailings can increase the rate at which the tailings capture CO2 by roughly an order of magnitude (Hamilton et al., 2010; Power et al., 2020). More recent work extends ideas on surficial mineralization to processes that mine and grind rocks for the purpose of DACCS (possibly with nickel or PGEs as a byproduct) and to processes that recycle alkalinity by heating weathered, carbonated materials to produce CO2 for offsite storage or use, and then returning metal oxides to the surface for another cycle of DACCS via ambient weathering (Kelemen et al., 2020; McQueen et al., 2020a).

Early investigations of in-situ, subsurface mineralization focused on CO2 storage via circulation of CO2-rich fluids through reactive rocks, such as what is practiced at the CarbFix project (Aradóttir et al., 2011). More recently, a few studies have provided rate, cost, and area estimates for in-situ DACCS via mineralization, circulating surface waters through reactive aquifers to achieve capture and permanent storage of dissolved CO2, and then producing CO2-depleted waters at the surface, where they will draw CO2 from the air.

In the context of in-situ mineralization, investigators are just beginning to consider hybrid methods involving the concentration of CO2 to 10 – 20 percent purity via DACCS, dissolution of CO2 into fluids, and further CO2 capture and storage via subsurface circulation of fluids through reactive rock formations. Optimal combinations of DACCS and in-situ mineralization may exist because, for example, enrichment of air to 5 wt% CO2 is significantly less energy-intensive (Wilcox et al., 2017), and therefore less costly, than enrichment to >95 percent CO2 (Kelemen et al., 2020).

2.1.2

Current status

As outlined in the previous section, there are three primary ways by which negative emissions can be achieved from mineralization: ex-situ methods, surficial methods and in-situ subsurface methods.

The general mechanism of carbon mineralization involves a pH-swing in which alkaline cations (i.e., Mg2+ and/or Ca2+) are released from their silicate structure by dissolution in aqueous fluids at low pH and subsequently react with aqueous CO2, forming carbonates at high pH. Such a swing can be engineered in ex-situ systems (e.g., Park and Fan, 2004), but also arises spontaneously in natural weathering of peridotite (Bruni et al., 2002; Canovas III et al., 2017; Paukert et al., 2012; Vankeuren et al., 2019).
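As an illustration, the two halves of the pH swing and their net result can be written out for forsterite (Mg2SiO4), the magnesium end-member of olivine. The choice of forsterite, and the representation of dissolved silica as H4SiO4, are assumptions made for this sketch; other Mg- and Ca-bearing silicates follow analogous reactions.

```latex
\begin{align}
\mathrm{Mg_2SiO_4 + 4\,H^+} &\rightarrow \mathrm{2\,Mg^{2+} + H_4SiO_4}
  && \text{(dissolution at low pH)} \\
\mathrm{Mg^{2+} + CO_3^{2-}} &\rightarrow \mathrm{MgCO_3}
  && \text{(carbonate precipitation at high pH)} \\
\mathrm{Mg_2SiO_4 + 2\,CO_2} &\rightarrow \mathrm{2\,MgCO_3 + SiO_2}
  && \text{(net reaction)}
\end{align}
```

In the net reaction, each mole of forsterite binds two moles of CO2, which is why magnesium-rich feedstocks have a high storage capacity per tonne of rock.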

Two primary schemes have been proposed to achieve ex-situ CO2 mineralization for storage. Direct mineralization is a one-step process that reacts CO2 with the alkaline feed. While direct mineralization is a simpler method, the full extent of the reaction may be difficult to achieve on the timescales for optimal ex-situ processes, depending on the nature of the feedstock. Some alkalinity sources contain rigid silicate structures, which cause transport limitations associated with the dissolution of calcium or magnesium cations into solution. Given that these reactive ions are the largest atoms in the silicate mineral structure, their release into solution requires distortion of the crystal lattice, which is more difficult in rigid structures. For this reason, the surface cations are often the most available for reaction, after which a passivation layer is created, which limits further transport of the underlying cations (NASEM, 2019). Further, the presence of other species such as iron oxides or silicates can decrease final product purity, which is important in some further uses of the product, such as synthetic aggregate. To mitigate these factors, indirect mineralization extracts alkalinity from the feed and converts it into a form that is more reactive with CO2, thus indirectly reacting the alkaline source with CO2. Separation of the alkalinity is also commonly invoked in proposed indirect mineralization processes, to remove undesired species initially present in the feed and to increase product purity. Currently, ex-situ mineralization methods are much more expensive than storage of supercritical CO2 in subsurface pore space (space between the grains of rock) and than both surficial and in-situ subsurface mineralization. Thus, over the past couple of decades, ex-situ applications have focused on the possibility of selling mineralized material as value-added products, such as building materials (Huang et al., 2019; Ostovari et al., 2020; Pan et al., 2020; Woodall et al., 2019).

Because this primer concerns carbon dioxide removal, whereas ex-situ methods are generally designed to store emissions captured elsewhere, below we focus more on surficial and in-situ systems in which the pH swing arises from fluid-rock reactions rather than use of low- and high-pH fluid reagents.

Coupling of DAC and mineralization is being explored in the CarbFix2 project in Iceland, where a DACCS unit has been installed at Hellisheiði Geothermal Power Station (Snæbjörnsdóttir et al., 2020). However, the net benefit of this approach may be reduced by the extensive energy required for DACCS with synthetic sorbents or solvents and for the subsequent compression of CO2.

Another option is to allow alkaline materials to passively react with atmospheric CO2 via ambient weathering at the surface (Harrison et al., 2013; McQueen et al., 2020a; Mervine et al., 2018; Wilson et al., 2009). Pilot experiments on one kind of surficial method are being carried out at several mines by the De Beers Group of Companies, which aims to make ten of its mines carbon-neutral within the next five years (Mervine et al., 2018). Here, the mineralization process’s overall carbon footprint can be perceived as negative or neutral depending on how the system boundaries are framed. Relevant boundary choices include whether the tailings require preprocessing or stirring, and the type of energy used for any concentration of CO2 in air and mixing with the tailings. The mine tailings draw CO2 from ambient air, thus removing it from the atmosphere. However, there are additional emissions associated with power generation for all the mine’s normal operations. De Beers frames its boundaries in this light, aiming for its mineralization system to counteract day-to-day emissions. A large, UK-funded project is evaluating enhanced weathering via addition of mafic rock material (basalt) to agricultural soils, with the hope that this might also increase crop productivity (Beerling et al., 2018).

More ambitious systems intended to reach the scale necessary to remove billions of tonnes of CO2 from air each year, via surficial weathering of alkaline industrial wastes and mined rock material, are under consideration at the lab and theoretical level, but they have not yet been implemented at pilot scale. Similarly, ideas about in-situ mineralization for DACCS have been evaluated by lab and modeling studies but have not yet been tried at the field pilot scale.

2.1.3

Predicted scale and storage potential

Solid industrial alkaline wastes could effectively store up to 1.5 GtCO2 per year based on current production and possibly more than 3 GtCO2 per year based on future forecasts (Kelemen et al., 2019b; Renforth, 2019). Note that these are estimates of storage capacity; reaction rates could limit these values significantly. Mineralization for storage alone, using high concentrations of CO2 captured elsewhere, would be much faster than mineralization via ambient weathering of most alkaline industrial wastes. Opportunities to use wastes for storage or DACCS vary geographically, as they are widely dispersed among different mines and industrial facilities, and some are already sold, for example, to be used in concrete mixtures as supplementary cementitious materials. These materials exhibit properties similar to ordinary Portland cement, but because their production does not result in CO2 emissions, their use in the production of concrete leads to a reduced carbon footprint. Alkaline industrial wastes sometimes contain hazardous asbestiform chrysotile or heavy metals. In many cases, the associated environmental hazards can be ameliorated through carbon mineralization. Natural sources of alkalinity (e.g., serpentine group minerals, basalt, or peridotite) are abundant in Earth’s crust, and could store on the order of 10 GtCO2 per year, as shown in Figure 2.3. In this case, storage capacity estimates include both the overall size of the potential CO2 reservoir and achievable rates, using reactants with both high CO2 concentrations (ex-situ processes, in-situ basalt, and peridotite storage) and ambient air (enhanced weathering processes).

Figure 2.3. Summary of annual storage potential versus cost in US$/tCO2 for proposed solid storage using fluids enriched in CO2. NOTE: Costs should be compared to the cost of storage of supercritical CO2 in subsurface pore spaces, ~$10-20/tCO2 (NASEM, 2019).

The CarbFix and Wallula CO2 storage projects (reviewed in Snæbjörnsdóttir et al., 2020 and Kelemen et al., 2020) have demonstrated the potential of CO2 storage via in-situ mineralization by injecting CO2-enriched aqueous fluids into underground basaltic reservoirs, where the CO2 is rapidly precipitated in carbonate minerals (Matter et al., 2016). This provides a safe, permanent storage solution for the captured carbon, in which caprock integrity might be less important over the long term than in sedimentary formations (Sigfusson et al., 2015). (See Section 3.9 on geological storage.) The caprock provides a primary trapping mechanism: low-permeability overlying rock physically traps the CO2 in the subsurface. It is important to note, however, that the CO2 injection process at CarbFix is “piggybacked” on the reinjection of spent geothermal fluid, which must not affect freshwater. To ensure the protection of freshwater, monitoring is required. During the injection process, the pressure is increased, and if the caprock is not effective, the elevated pressure may lead to physical leakage of either the re-injected geothermal fluid or the CO2. At CarbFix, condensation of natural steam in the geothermal power generation cycle produces “non-condensable gases,” mainly CO2 and H2S. The CarbFix project currently captures and stores about 12,000 tonnes of CO2 annually, with the aim of increasing injection by a factor of about three, to about 90 percent of the CO2 emissions from the Hellisheiði geothermal power plant, before 2030. In addition to enriched CO2, injected fluids in CarbFix2 contain an equivalent enrichment of H2S, which reacts with basalt to form sulfide minerals, thus mitigating the environmental impact of geothermal H2S as well as CO2 emissions.

The total amount of CO2 that could be stored globally in mafic and ultramafic rocks has been estimated to be around 60,000,000 GtCO2 (Kelemen et al., 2019b), about half in onshore reservoirs within a few kilometers of the Earth’s surface. Other estimates focused on the upper-bound capacity of submarine lavas in oceanic crust suggest that 8,400 – 42,000 GtCO2 could be stored in seafloor aquifers along mid-oceanic ridges, and 21,600 – 108,000 GtCO2 in basalt aquifers associated with intra-plate volcanism (Goldberg and Slagle, 2008).

2.1.4

Current challenges to deployment

Limitations of this technology are related to accessibility, reaction kinetics, and diffusion. While it is estimated that the magnitude of alkalinity sources available for CO2 mineralization is sufficient for significant CO2 storage, complications arise with the location of such sources. Most basalts and ultramafic rocks are on the ocean floor. However, significant volumes of continental flood basalts and ultramafic rocks are on land. (See Chapter 3, Figure 3.5.) For natural silicates, it is estimated that hundreds of trillions of tonnes of rock are available (NASEM, 2019). For instance, the largest ophiolite in the world, the Samail ophiolite, is a 350 km x 15 km peridotite massif in Oman. Considering accessible depths down to 3 km, and that 1 m3 of rock weighs about three tonnes, about 47,000 Gt of peridotites are accessible for carbon mineralization in the Samail ophiolite. The magnesium atoms in the ophiolite represent roughly 40 – 45 percent of the rock. If all of this magnesium reacted with CO2 to form carbonates, this ophiolite alone could sequester about 20,000 GtCO2. Other ophiolites worldwide could increase that number by a factor of five or more, raising the sequestration potential of peridotites to more than 100,000 GtCO2. This estimate does not include seafloor peridotites near oceanic ridges, which have an even greater potential than on-land massifs (Kelemen et al., 2011). Locating and mining so much feedstock would be expensive both economically and energetically and would potentially require single-purpose use of huge areas. Alternatively, some proposed technologies would circulate CO2-bearing fluids and/or gases into subsurface rock formations, rather than mining and grinding gigatonnes of rock (Kelemen and Matter, 2008).
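The Samail ophiolite estimate above can be reproduced with a short back-of-envelope calculation. The sketch below uses the dimensions and rock density quoted in the text. It assumes that the "40 percent" figure can be read as the mass fraction of MgO in the rock and that each mole of MgO binds one mole of CO2; both readings are assumptions made here for illustration, as are the function names.

```python
# Back-of-envelope check of the Samail ophiolite figures quoted in the text.

M_MGO = 40.30  # molar mass of MgO, g/mol
M_CO2 = 44.01  # molar mass of CO2, g/mol


def rock_mass_gt(length_km, width_km, depth_km, density_t_per_m3):
    """Mass of a rectangular rock volume, in gigatonnes."""
    volume_m3 = length_km * width_km * depth_km * 1e9  # 1 km^3 = 1e9 m^3
    return volume_m3 * density_t_per_m3 / 1e9          # 1 Gt = 1e9 t


def co2_capacity_gt(rock_gt, mgo_mass_fraction):
    """CO2 bound if every mole of MgO forms one mole of MgCO3."""
    return rock_gt * mgo_mass_fraction * M_CO2 / M_MGO


rock = rock_mass_gt(350, 15, 3, 3.0)
# ASSUMPTION: 0.40 is read as the MgO mass fraction of the peridotite.
co2 = co2_capacity_gt(rock, 0.40)
print(f"rock: {rock:,.0f} Gt, CO2 capacity: {co2:,.0f} Gt")
```

Under these assumptions the rock mass comes out near 47,000 Gt and the CO2 capacity slightly above 20,000 Gt, in line with the rounded values in the text. Using 45 percent instead of 40 percent raises the capacity proportionally.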

Laboratory-scale studies have shown that mafic and ultramafic materials in general react much more quickly with CO2 than sedimentary rocks in proposed reservoirs for storage of supercritical CO2 in the pore space of the rocks (void space between the mineral grains) (Kelemen et al., 2019; NASEM, 2019), dramatically increasing the permanence of CO2 storage in the pore space of these formations. Supercritical CO2 is a fluid state in which CO2 exhibits both gas-like and liquid-like properties; in this phase, it is less susceptible to leaking from the reservoir. In particular, pilot-scale studies have shown that CO2 injected into basalt formations can be mineralized in about a year. (See Section 3.1.1.) While natural systems are supply-limited and record CO2 mineralization over millennia, the results of the CarbFix experiment confirm theoretical estimates that, if rapid fluid circulation can be achieved and sustained in the target rock formations, all CO2 will be removed from circulating surface waters to form solid carbonate minerals on a timescale of years.

An important parameter for ex-situ and surficial mineralization is the grain (particle) size of the feedstock, where the optimal size for some feedstocks has been estimated to be ~100 µm (Sanna et al., 2013) for ex-situ processes. Crushing and grinding of mined minerals is both energetically and economically intensive, so it is advantageous to use an alkalinity source that is already of sufficient particle size/reactivity, like some industrial and mining wastes (Kelemen et al., 2020).
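One way to see why grain size matters is to look at how surface area per unit mass scales with particle diameter. The following is a minimal sketch that assumes smooth, spherical particles and a density of 3,000 kg/m3 (roughly three tonnes per cubic metre); both are simplifying assumptions, and real ground rock typically has a rougher, larger reactive surface. The function name is hypothetical.

```python
def geometric_ssa_m2_per_kg(diameter_m, density_kg_per_m3):
    """Geometric specific surface area of smooth spheres: 6 / (rho * d)."""
    return 6.0 / (density_kg_per_m3 * diameter_m)


DENSITY = 3000.0  # kg/m^3 (assumed for illustration)

# Compare a 1 mm grain, a 100 um grain, and a 10 um grain.
for d_um in (1000, 100, 10):
    ssa = geometric_ssa_m2_per_kg(d_um * 1e-6, DENSITY)
    print(f"{d_um:>5} um -> {ssa:6.1f} m^2/kg")
```

Each tenfold reduction in diameter gives a tenfold increase in geometric surface area per kilogram, which is the benefit that has to be weighed against the energy and cost of grinding.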

Popular alkalinity extraction methods for the first step of indirect, ex-situ CO2 mineralization involve the use of acids, salts, and/or heat. Strong acids extract alkalinity at sufficient rates but present environmental and health hazards. Some weak acids have been explored and have shown some success but are not as effective (Teir et al., 2007). Ammonium-based salts have also been successful on small scales (Zevenhoven et al., 2017), but an economically-integrated process has yet to be proven, partly because of the high expense of the salts and the energy-intensive salt regeneration step. This approach, also known as the Åbo Akademi process, reacts an alkaline silicate mineral with an ammonium sulfate salt at a temperature above 400 °C (Zevenhoven et al., 2017). The reaction produces alkaline sulfates that are water soluble, allowing them to be dissolved and separated from the remaining insoluble waste rock. The resulting alkaline solution can then be carbonated by bubbling CO2 through it until saturation, precipitating the carbonate species.

For the carbonation step of indirect mineralization, limitations are dominated by diffusion. Diffusion of CO2 into ex-situ, aqueous systems can be accelerated by decreasing the diffusion length scale via sparging (e.g., Gunnarsson et al., 2018) and/or stirring (e.g., Gadikota, 2020), and/or by increasing the chemical potential gradient via introduction of CO2 at an elevated partial pressure (Gadikota, 2020).
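The effect of shortening the diffusion length can be illustrated with the standard order-of-magnitude scaling t ≈ L²/D for diffusion over a distance L. The sketch below assumes a diffusivity of CO2 in water of about 1.9e-9 m2/s near room temperature. That value and the function name are assumptions for illustration, and the estimate ignores convection and reaction.

```python
# Order-of-magnitude diffusion times for dissolved CO2 in water.

D_CO2_WATER = 1.9e-9  # m^2/s, approximate value near 25 C (assumed)


def diffusion_time_s(length_m, diffusivity=D_CO2_WATER):
    """Characteristic time for diffusion over a distance: t ~ L^2 / D."""
    return length_m ** 2 / diffusivity


# Length scales from a quiescent centimetre-scale layer down to the
# sub-millimetre scales that sparging or stirring aim to create.
for length_m in (1e-2, 1e-3, 1e-4):
    t = diffusion_time_s(length_m)
    print(f"L = {length_m:.0e} m -> t ~ {t:,.0f} s")
```

Because the time scales with the square of the distance, halving the diffusion length cuts the characteristic time by a factor of four, which is why fine bubbles and vigorous mixing are effective.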

2.1.5

Current and estimated costs

According to NASEM (2019), the cost to store one tonne of CO2 from air via carbon mineralization lies between $20 and $100 per tCO2. Within this range, storage of carbon within mine tailings can be carried out at a relatively low cost, but it provides minimal storage capacity. Figure 2.3 illustrates storage potential versus cost.

Figure 2.4. The mechanism of trapping changes over time from structural to solubility to mineral, leading to increased permanence and decreased risk (Figure 8 in Kelemen et al., 2020; Figure 5.9 in IPCC, 2005a; Figure 6.7 in NASEM, 2019; Figure 9 in Snæbjörnsdóttir et al., 2017)

Most ex-situ mineralization methods for CO2 storage are significantly more expensive than storage of supercritical CO2 fluid in subsurface pore space (~ $10 – 20/tonne CO2, NASEM 2019, Chapter 7). As a result, storage of CO2 via mineralization may not be cost-competitive with injection into unreactive storage rock in many places. However, in places where there is no appropriate reservoir for long-term, subsurface storage of large amounts of supercritical CO2 in pore space, mineralization may be a better storage method. Storage via mineralization may also be advantageous in the long term, as the CO2 is molecularly bound in solid minerals that are inert and pose little risk of groundwater contamination.

It is important to note, again, that some proposed mineralization methods use ambient weathering or subsurface reactions to achieve CDR. As such, their costs should be compared with estimates for more highly engineered methods for DAC with solid sorbents or solvents, which are much higher than cost estimates for storage alone.

Specifically, mining and grinding of rock for the primary purpose of DACCS via ambient weathering has similar costs to engineered DACCS using synthetic sorbents. However, the area requirements for capture and storage of CO2 in ground rock material or alkaline industrial wastes on the surface at the scale of gigatonnes per year are very large—much larger than for DAC using synthetic sorbents coupled with subsurface CO2 storage. Recycling alkalinity may greatly reduce the cost of feedstocks, and the long-term area requirement, per tonne of CO2 net removed from air for DACCS via ambient weathering. As a simple example, this might be achieved by weathering MgO to produce MgCO3; calcining MgCO3 to produce MgO plus CO2 for offsite storage or use; and redistribution of produced MgO for another cycle of weathering. While there are many uncertainties involved, this “MgO looping” method currently has the lowest peer-reviewed cost estimates of any proposed DAC method. Finally, in-situ, subsurface carbon mineralization for DAC coupled with solid storage may be less expensive than many surface approaches, and requires far less land, but there is uncertainty about permeability, surface reaction rates, and potential surface area.
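The mass balance behind the MgO looping example follows from the stoichiometry MgO + CO2 → MgCO3. The sketch below is a minimal illustration of gross CO2 captured per cycle and over repeated cycles. The `conversion` and `loss_per_cycle` parameters and the function names are hypothetical, introduced only for this example. The calculation does not account for emissions from the energy used in calcination, so it says nothing about net removal.

```python
# Gross CO2 uptake in an idealized MgO looping cycle (stoichiometry only).

M_MGO = 40.30  # molar mass of MgO, g/mol
M_CO2 = 44.01  # molar mass of CO2, g/mol


def co2_per_cycle_t(mgo_t, conversion=1.0):
    """Tonnes of CO2 bound when `conversion` of the MgO forms MgCO3."""
    return mgo_t * conversion * M_CO2 / M_MGO


def cumulative_co2_t(mgo_t, cycles, conversion=1.0, loss_per_cycle=0.0):
    """Gross CO2 captured over several weathering/calcination cycles.

    loss_per_cycle is a hypothetical fraction of MgO lost in each cycle.
    """
    total = 0.0
    inventory = mgo_t
    for _ in range(cycles):
        total += co2_per_cycle_t(inventory, conversion)
        inventory *= 1.0 - loss_per_cycle
    return total


print(f"per cycle: {co2_per_cycle_t(1.0):.3f} t CO2 per t MgO")
print(f"10 cycles, 5% loss each: {cumulative_co2_t(1.0, 10, loss_per_cycle=0.05):.2f} t CO2")
```

At full conversion, one tonne of MgO binds a little over one tonne of CO2 per cycle, so reusing the same MgO many times is what reduces feedstock demand and long-term area per tonne of CO2 removed.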

2.1.6

Example projects

The following project examples all have the potential to be carbon dioxide removal, provided that the appropriate steps are followed and that the CO2 is sourced from either BECCS (3.7) or DAC (3.8). In 2013, nearly a kilotonne of pure, supercritical CO2 was injected into the Columbia River flood basalts near Wallula, Washington, in the United States, in two brecciated (broken-up or fragmented rock) basalt zones at a depth of 800 – 900 meters. Subsurface reactions between the CO2, formation waters, and basaltic rocks were monitored. Some solid carbonate reaction products were observed in rock core samples, but the proportion of CO2 that has been mineralized, versus dissolved in aqueous fluids within the basalt rock, is unknown. Extensive monitoring several years after injection confirmed that no substantial physical leakage of CO2 had occurred from the storage formation since its injection. Injection of CO2 from a second geothermal plant in the area is scheduled to begin in 2021.

CO2 mineralization can be made profitable, or at least less expensive, by selling the carbonate products for use in the construction industry (Chapter 5). There are several examples of how this is currently being done, some of which are finalists in the Carbon XPRIZE competition (XPRIZE, 2020). CarbonCure, a Carbon XPRIZE finalist based in Nova Scotia, Canada, injects CO2 into concrete during the concrete curing process to enhance cement curing reactions and thus reduce the amount of calcined material used in concrete. The CO2 reacts with calcium to form nanocrystals of calcium carbonate that seed conventional cement hydration reactions (Penn State, College of Engineering, 2020), which take place once cement has been mixed with water and result in the formation of products that contribute to the short- and long-term characteristics of Portland cement concrete.

The process results in enhanced-hydration products and a stronger overall concrete that requires 7 – 8 percent less Portland cement, decreasing concrete’s overall carbon footprint by 4.6 percent (Monkman and MacDonald, 2017). The CO2 used by CarbonCure is sourced from industrial emitters, collected and distributed by gas suppliers, and stored in pressurized vessels at concrete plants. The technology is easily retrofitted at a concrete plant and has already been installed across North America and Asia (CarbonCure Technologies, 2020). Alternatively, Blue Planet Ltd, located in California, dissolves CO2 from flue gas into an ammonia solution to carbonate a stream of Ca2+ ions (originating from alkaline industrial waste), forming synthetic limestone. Small aggregate substrates present in the reactor are coated with synthetic limestone, creating layered aggregates. The carbonate layers of the aggregate products are 44 wt% CO2 and can be produced in sizes ranging from that of sand to gravel (Blue Planet Ltd., 2019).
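The 44 wt% figure for the carbonate layers matches the CO2 mass fraction of pure calcium carbonate. The short check below assumes the layers are pure CaCO3, which is an assumption made here for illustration, and uses standard atomic masses.

```python
# CO2 mass fraction of pure calcium carbonate (CaCO3 -> CaO + CO2).

ATOMIC_MASS = {"Ca": 40.078, "C": 12.011, "O": 15.999}  # g/mol


def co2_mass_fraction_caco3():
    """Mass of CO2 per unit mass of CaCO3."""
    m_co2 = ATOMIC_MASS["C"] + 2 * ATOMIC_MASS["O"]
    m_caco3 = ATOMIC_MASS["Ca"] + ATOMIC_MASS["C"] + 3 * ATOMIC_MASS["O"]
    return m_co2 / m_caco3


fraction = co2_mass_fraction_caco3()
print(f"CO2 in CaCO3: {100 * fraction:.1f} wt%")
```

The figure applies to the carbonate coating only. The CO2 content of a whole aggregate particle is lower, because the substrate core does not contribute.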

As discussed previously for CO2 mineralization, these examples of CarbonCure and Blue Planet Ltd could achieve negative emissions only if using a CO2 stream from a DAC process, which is not currently the case. However, using CO2 mineralization to store CO2 captured from air could broaden the possible opportunities for DAC in the absence of other nearby storage options, while also providing economic value by selling building materials.

Areas for potential improvement of proposed in-situ mineralization methods include a deeper understanding of the characteristics of possible reservoirs (nano-to-kilometer scale), the distribution of the reaction products in such reservoirs, the reaction rate of target minerals, the evolution of permeability and pressure in the reservoir, the large-scale impact of the chemical physics processes leading to clogging or cracking, and the effect of potential geochemical contamination of aquifers and surface waters. The deployment of subsurface CO2 storage, via mineralization or in pore space, would also require a change in regulations to include a larger variability of storage options, with requirements corresponding to the type of rocks (Kelemen et al., 2019; NASEM, 2019). For example, the CarbFix pilot project has demonstrated that an impermeable caprock might not be necessary to ensure the permanence of storage in basalt formations, due to rapid mineralization of CO2 dissolved in injected fluids (solution trapping followed by carbon mineralization) (Matter et al., 2016; Sigfusson et al., 2015). Because CO2-free fluids may then be recycled, initial concerns about water consumption for solution trapping seem to have been addressed (Sigfusson et al., 2015).

2.2

Ocean alkalinity enhancement

2.2.1

Introduction

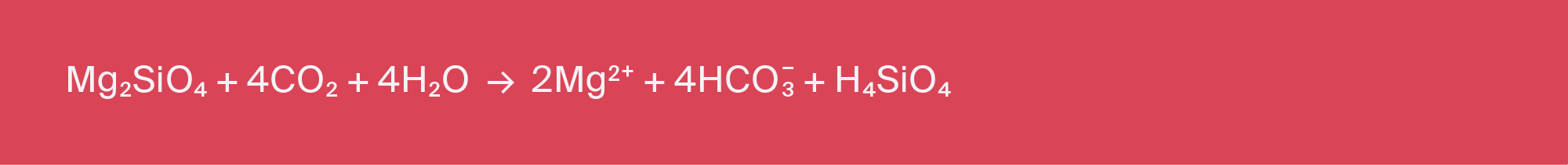

The ocean contains approximately 40 trillion tonnes of dissolved carbon, mostly in bicarbonate ions (HCO3-) with lesser amounts of carbonate ions (CO32-). These anions exist in charge balance with dissolved cations in the ocean (Ca2+, Mg2+, Na+, K+). Natural weathering promotes the release of cations via dissolution of carbonate minerals (Equation 2.1) or silicate minerals (Equation 2.2), and reaction with aqueous CO2. In solutions in contact with air (rivers, lakes, and the surface of the ocean), the required aqueous CO2 is provided by uptake of CO2 from the atmosphere.

2.1 →

2.2 →

Methods to accelerate this process and capture and store atmospheric CO2 in the ocean as dissolved carbonate and bicarbonate ions have been proposed for more than 25 years (as reviewed in Renforth and Henderson, 2017). They include CDR strategies that propose adding crushed minerals directly to the land surface or oceans, or weathering minerals in seawater reactors. Like carbon mineralization, these methods accelerate natural weathering mechanisms. However, rather than promoting the formation of carbonate minerals, they convert the carbon dioxide to dissolved bicarbonate (HCO3-), which would reside in the ocean. The chemistry of the surface ocean is a strong inhibitor of solid carbonate formation (thus preventing the reversal of the reaction in Equation 2.1). As a result, every mole of Ca2+ or Mg2+ dissolved is charge-balanced at near-neutral pH by almost two moles of CO2 dissolved as HCO3-. More precisely, between 1.5 and 1.8 moles of CO2 are dissolved per mole of cation, compared with the 1:1 ratio in solid CaCO3 or MgCO3. (As an aside, note that looping of alkalinity, repeatedly recycling CaO or MgO to capture CO2 from air via enhanced weathering as described in Section 2.1, could greatly increase the number of moles of CO2 removed from air, per mole of initial feedstock.)
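The molar ratios above translate directly into mass ratios. The short Python sketch below is an illustration only: it assumes the feedstock is a pure oxide (CaO or MgO) that dissolves completely and supplies one mole of cation per mole of oxide, and the function name `co2_per_tonne` is ours. The 1.5 – 1.8 range is taken from the text; the molar masses are standard values.

```python
# Molar masses in g/mol (standard values).
M_CO2 = 44.01
M_CAO = 56.08   # lime
M_MGO = 40.30   # magnesium oxide


def co2_per_tonne(oxide_molar_mass, mol_co2_per_mol_cation):
    """Tonnes of CO2 stored per tonne of oxide, assuming one cation per formula unit."""
    return mol_co2_per_mol_cation * M_CO2 / oxide_molar_mass


for name, molar_mass in (("CaO", M_CAO), ("MgO", M_MGO)):
    dissolved_low = co2_per_tonne(molar_mass, 1.5)    # lower bound from the text
    dissolved_high = co2_per_tonne(molar_mass, 1.8)   # upper bound from the text
    solid = co2_per_tonne(molar_mass, 1.0)            # 1:1 ratio in solid carbonate
    print(f"{name}: {dissolved_low:.2f}-{dissolved_high:.2f} tCO2/t as bicarbonate, "
          f"{solid:.2f} tCO2/t as solid carbonate")
```

Real feedstocks are rarely pure oxides and may dissolve only partially, so these figures are best read as upper limits for the stated assumptions.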

In addition to capturing CO2 from air, ocean alkalinity enhancement could be an important part of an environmental management strategy designed to ameliorate the impacts of ocean acidification, over and above the benefit of reducing atmospheric CO2 (Rau et al., 2012). However, the technologies to do this remain largely untested beyond the laboratory, and the environmental consequences of elevated ocean alkalinity are poorly understood.

2.2.2

Current status

Currently, ocean alkalinity enhancement is practiced on a small scale by commercial seafood companies and aquaria to mitigate the effects of ocean acidification on shell-forming organisms. Sodium hydroxide, sodium carbonate or limestone are the sources of increased alkalinity, and the net carbon balance – CO2 uptake from air versus input of CO2 from carbonate feedstock – has not been considered.

2.2.3

Predicted scale and storage potential

If the impacts were limited only to increasing ocean alkalinity, and if those impacts were instantaneously distributed throughout the global surface ocean, then it could be possible to store trillions of tonnes of CO2 without surpassing carbonate saturation states that have been present in the open oceans over the past ~800,000 years, as shown in Figure 2.5 (Hönisch et al., 2009). However, the total storage potential constrained in this way depends on atmospheric CO2 concentration. A higher atmospheric CO2 level corresponds to a greater capacity for increasing ocean alkalinity (Renforth and Henderson, 2017). In practice, the storage potential may be limited by the capacity to create technologies at scale, for example, to distribute metal oxides at low concentration over a large area, or by the environmental impact around an area where a large amount of metal oxides is introduced.

2.5 →

a) Calcite saturation reconstruction of the surface ocean over the last 800,000 years (Hönisch et al., 2009); b) projection of future surface ocean saturation states as a consequence of an RCP8.5 emission scenario (red line) and the change in saturation state if that emission scenario was wholly mitigated by increasing ocean alkalinity (dashed line, equivalent to ~5,000 GtCO2 storage); adapted from Renforth & Henderson (2017).

2.2.4

Current challenges to deployment

Technical challenges for upscaling alkalinity extraction, transport, and treatment are likely similar to those of carbon mineralization (Section 3.1.1). The environmental impacts of these approaches will not be instantaneously distributed across the global surface ocean and depend on the distribution method. One option is to apply crushed minerals or alkaline solutions to smaller parts of the surface ocean and then rely on currents to distribute the impact. As such, there will always be a temporal and spatial gradient of impact between the point of addition and the wider surface ocean. The nature of this gradient is important if the goal is to protect ecosystems from the impacts of ocean acidification and would be a key design parameter in such a scheme. However, excessively localized alkalinity may have undesirable impacts on ecosystems (Bach et al., 2019; Fukumizu et al., 2009). Elevated alkalinity may also promote biological carbonate formation, which would release CO2 (for example via Equation 2.1, read from right to left) and decrease the effectiveness of the storage. Conceptually, this is similar to leakage from storage of supercritical CO2 in subsurface pore space, in which CO2 is returned to the atmosphere, yet the magnitude and rate of carbonate formation remain poorly constrained by both models and experiments. In addition, some potential mineral feedstocks contain elements that can impact ecosystems (e.g., iron, silicon, nickel), the implications of which are reviewed by Bach et al. (2019). Elevating alkalinity with a range of minerals may cause broad ecosystem shifts that favor mollusks, sponges, diatoms and other marine organisms that use silicate or calcium to grow and develop, which in turn might lead to other ecological impacts.

2.2.5

Proposed methods and estimated costs

Proposals include spreading crushed minerals on the land surface, after which dissolved cations and bicarbonate are transported to the oceans via runoff, rivers, and groundwater (Beerling et al., 2018; Hartmann et al., 2013); adding minerals directly to the ocean (Harvey, 2008; Köhler et al., 2013) or coastal environments (Meysman and Montserrat, 2017; Montserrat et al., 2017); creating more reactive materials for addition to the ocean (Renforth et al., 2013; Renforth and Kruger, 2013); using a reactor to dissolve limestone (Rau, 2011; Rau and Caldeira, 1999); and using electrolysis to create alkaline solutions and neutralizing the produced acidity through weathering (House et al., 2007; Rau et al., 2013). All of these proposals require research to assess their technical feasibility (laboratory and demonstration scale), cost, environmental impact, and social acceptability (Project VESTA, 2020).

Kheshgi (1995) proposed adding lime (CaO) or portlandite (Ca(OH)2), produced by the calcination of limestone, to the surface ocean, combined with flue gas CO2 capture and sequestration. Lime added to the ocean would dissolve and increase alkalinity. For this process to be carbon-negative, it is essential to curtail the release of CO2 produced during calcination. Renforth et al. (2013) assessed the techno-economics of this approach for a range of mineral feedstocks. They suggested that lime or hydrated lime production in a kiln with CCS, together with the associated energy costs of raw material preparation and ocean disposal, would require between 2 and 10 GJ per net tonne of CO2 sequestered.
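To give a sense of what the 2 – 10 GJ per net tonne range implies at climate-relevant scale, the following Python sketch converts it to an annual energy demand. It is a unit conversion only: the 1 GtCO2/yr removal rate is an illustrative assumption, and the function name `annual_energy_ej` is ours.

```python
GJ_PER_EJ = 1e9        # 1 EJ = 1e18 J = 1e9 GJ
TONNES_PER_GT = 1e9    # 1 Gt = 1e9 tonnes


def annual_energy_ej(removal_gt_per_yr, gj_per_net_tonne):
    """Energy demand in EJ/yr for a given net removal rate and energy intensity."""
    return removal_gt_per_yr * TONNES_PER_GT * gj_per_net_tonne / GJ_PER_EJ


REMOVAL_GT_PER_YR = 1.0   # illustrative assumption, not a projection

for intensity in (2.0, 10.0):   # GJ per net tonne CO2, range quoted in the text
    energy = annual_energy_ej(REMOVAL_GT_PER_YR, intensity)
    print(f"{intensity:g} GJ/t at {REMOVAL_GT_PER_YR:g} GtCO2/yr -> {energy:g} EJ/yr")
```

Because one gigatonne is 1e9 tonnes and one exajoule is 1e9 GJ, the intensity in GJ per tonne equals the demand in EJ per GtCO2 removed.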

While it is currently not permitted to add materials to the ocean (IMO, 2008, 2003), large parts of the supply chain (mineral extraction, transport, calcination) to do so already operate at global scale. If these could be adapted and expanded, it may be possible to achieve GtCO2/yr CDR relatively quickly. As such, the net negative balance of ocean liming may be limited only by the ability to deploy CCS on existing and new facilities. The engineering challenges associated with ocean alkalinity-based CDR approaches may, in the long term, constrain the scalability of this method of carbon storage. In the near term, it is important to understand the environmental impacts and beneficial effects of mitigating ocean acidification, together with their potential for social acceptance, and political and regulatory implementation.

There have been limited techno-economic assessments of ocean alkalinity enhancement proposals, and they are exclusively based on theoretical flow sheets (although many involve existing components or supply chains). However, these assessments provide approximate costs and energy requirements (Table 2.1).

2.1 →

Comparison of electrical and thermal energy requirements and financial costs of ocean alkalinity carbon storage technologies (adapted from Renforth & Henderson, 2017)

2.3

Soil carbon sequestration

2.3.1

Introduction

Soil carbon sequestration for CDR involves making changes to land management practices that increase the carbon content of soil, resulting in a net removal of CO2 from the atmosphere (Paustian et al., 2016; Kolosz et al., 2019; Sanderman and Baldock, 2010). The stock of carbon in the soil over time is determined by the balance between carbon inputs from litter, residues, roots or manure, and losses of carbon, mostly through microbial respiration and decomposition, which is increased by soil disturbance (Coleman and Jenkinson, 1996).

Globally, terrestrial biotic carbon stocks include around 600 Gt carbon in plant biomass (mainly forest) and ca. 1,500 Gt carbon as organic matter within the soil to a total depth of around 1 meter (ca. 2,600 Gt C to 2 m). The total annual fluxes moving between the atmosphere and land-based ecosystems (i.e., net primary productivity by plants and respiration by the soil biota) each equate to around 60 Gt C/yr (Le Quéré et al., 2016). These fluxes are largely in balance; however, it is estimated that there is currently a net uptake (or “sink”) of carbon in biomass and soils of land-based ecosystems of approximately 1 – 2 Gt carbon (Le Quéré et al., 2016). It is thought that this “unmanaged” carbon sink is largely due to greater carbon uptake by forests and grasslands due to CO2 fertilization, increased atmospheric nitrogen deposition, and recovery of previously logged forests in parts of the Northern Hemisphere (Houghton et al., 1998). In contrast, historic land use change involving clearing of forests and plowing of prairies for new cropland is thought to have resulted in a total loss of 145 Gt C from the woody-based biomass and soils between 1850 and 2015 (Houghton and Nassikas, 2017). Over the past 12,000 years, land use and land cover change has resulted in an estimated loss of 133 Gt C from soils alone (Sanderman et al., 2017). Hence, most managed agricultural soils are depleted in carbon relative to the native ecosystems from which they were derived. The basis of soil carbon sequestration approaches is to reverse this historical trend and rebuild carbon stocks on managed lands.

In general, management practices that increase the inputs of carbon to soil, or reduce losses of carbon, promote soil carbon sequestration. There are many land management practices that can promote soil carbon sequestration (such as no-till agriculture, planting cover crops, and compost application) (Lal, 2013, 2011; Smith et al., 2014, 2008), some of which can also promote carbon sequestration in above-ground biomass (e.g., agroforestry practices). The goal of all of these approaches is to increase and maintain carbon stocks in the form of soil organic matter, which is derived from the photosynthetic uptake of carbon dioxide by plants. A key quantity of interest is the mean residence time, or how long organic matter remains in the soil. While small fractions of organic matter can have mean residence times on the order of centuries, much of the organic matter in soils is relatively labile (prone to decomposition or transformation). Thus, the key to maintaining greater carbon storage in soils is to maintain long-term conservation practices (Sierra et al., 2018).
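The interplay of inputs, losses, and mean residence time can be illustrated with a deliberately simple one-pool model, dC/dt = I − C/τ, where C is the soil carbon stock, I the carbon input rate, and τ the mean residence time. This is a textbook simplification, not the model used in the studies cited here, and every parameter value below is hypothetical. The sketch raises inputs for 30 years, then reverts to the baseline practice for another 30 years.

```python
import math


def soil_carbon(c0, inputs, tau, years):
    """Stock after `years` for the one-pool model dC/dt = inputs - C/tau (analytic solution)."""
    steady_state = inputs * tau
    return steady_state + (c0 - steady_state) * math.exp(-years / tau)


# Hypothetical, illustrative parameters (not taken from the cited literature).
TAU = 25.0             # mean residence time, years
BASE_INPUT = 2.0       # carbon inputs under conventional practice, tC/ha/yr
IMPROVED_INPUT = 2.5   # carbon inputs under a conservation practice, tC/ha/yr

c_start = BASE_INPUT * TAU                                     # baseline steady state
c_after = soil_carbon(c_start, IMPROVED_INPUT, TAU, 30)        # 30 years of improved practice
c_reverted = soil_carbon(c_after, BASE_INPUT, TAU, 30)         # 30 years after reverting

print(f"start: {c_start:.1f} tC/ha")
print(f"after 30 yr of improved practice: {c_after:.1f} tC/ha")
print(f"30 yr after reverting: {c_reverted:.1f} tC/ha")
```

In this toy model the gain accumulates only while the improved practice continues and decays back toward the baseline once it stops, which is the point the paragraph above makes about long-term maintenance.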

2.3.2

Current status

Several crop and soil management practices that can potentially increase soil carbon sequestration have been explored on a limited scale. For example, there is considerable research on the use of cover crops (plants grown after the primary food or fiber crops have been harvested, instead of leaving the soil bare), and many farmers are successfully using them, but currently less than 5 percent of the land used for annual crops in the U.S. includes cover crops in the planting rotation (Jian et al., 2020; Wade et al., 2020). Like cover crops, which prevent erosion and can build soil fertility by enhancing carbon and nitrogen stocks, many soil carbon sequestration activities are related to so-called conservation practices that increase or maintain soil health more generally. But various barriers limit the adoption rate of these methods. Farming is inherently risky and instituting major changes in management increases those risks. Strategies to reduce the risks of adopting conservation practices include improved technology, education, financial incentives to overcome the costs of management changes, and reform of crop insurance regulations (Paustian et al., 2016). Ideally, farmers and ranchers can be incentivized to transition to practices that create healthier soils, increase the stability of year-to-year crop yields, and reduce the need for purchased inputs like fertilizer. Incentives to maintain such practices are vital, either through increased profitability or with subsidies for improved environmental performance – or both. If, instead, a farmer reverts to conventional (non-conservation) practices, then much of the previously sequestered carbon may be lost back to the atmosphere, with minimal (or no) net climate benefit. Thus, to a large degree, the permanence of CDR through soil carbon sequestration is limited by socioeconomic and behavioral factors.

Rates for soil carbon sequestration vary considerably, depending on the climate, soil type, land use history, and management practices employed (Ogle et al., 2005; Paustian et al., 1997; Smith, 2012). As a rough approximation, best practices on land growing annual crops (such as barley and corn) can yield annual carbon sequestration rates up to 0.6 tC/ha/yr (around 2.2 tCO2/ha/yr), whereas conversion of tilled annual cropland to pastures, conservation buffers, or grassland set-asides can yield increases of 1 tC/ha/yr or more (NASEM, 2019). Considerable care should be taken when extrapolating these example data points to larger-scale estimates due to variability across space, time, and depth.
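The conversion between tonnes of carbon and tonnes of CO2 used above follows from the ratio of molar masses (about 3.66). A minimal Python sketch, with the function name `tc_to_tco2` chosen here for illustration:

```python
M_C = 12.011     # g/mol, carbon
M_CO2 = 44.009   # g/mol, carbon dioxide


def tc_to_tco2(tonnes_carbon):
    """Convert a mass of carbon to the equivalent mass of CO2."""
    return tonnes_carbon * M_CO2 / M_C


for rate_tc in (0.6, 1.0):   # tC/ha/yr, example rates quoted in the text
    print(f"{rate_tc} tC/ha/yr = {tc_to_tco2(rate_tc):.1f} tCO2/ha/yr")
```

The same factor applies to stocks as well as rates. As the paragraph above cautions, multiplying a per-hectare rate by a large land area gives only a rough figure because rates vary with space, time, and depth.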

2.3.3

Predicted scale and storage potential

The technical potential for soil carbon sequestration globally, assuming widespread adoption of certain practices, could be as high as 5 GtCO2eq per year, without considering economic constraints. (Note that mass of CO2 rather than of C is used when reporting effects on atmospheric CO2 stocks; Paustian et al., 2020; Smith, 2016.) According to the NASEM (2019) report, the conservative (lower) potential rate for CO2 removal given the current state of the technology, at a cost below $100/tCO2, is around 3 GtCO2/yr, globally. New technologies, such as perennial grain crops and annual crop phenotypes with larger, deeper root systems, that lead to greater belowground carbon inputs (e.g., via increased mycorrhizal colonization, increased root sloughing and exudation, and/or roots with more recalcitrant tissues) could yield removal potentials as high as 8 GtCO2eq/yr globally (Paustian et al., 2016). For the U.S. alone, estimates of technical potentials are 240 – 800 MtCO2eq/yr, with the lower number representing widespread deployment of current conservation management practices and the higher number representing deployment of new technologies, such as enhanced root phenotypes for annual crops that have not yet been commercially developed. These upper bounds, especially, may not be achievable given socioeconomic constraints.

Sequestering soil organic carbon (SOC) can be an efficient and stable means of CDR because it has very long residence times, with bulk SOC residence times ranging from hundreds to thousands of years (Torn et al., 2009). It is also less vulnerable to ecosystem disturbances like wildfire and disease than forest biomass, and soil carbon release to the atmosphere, should it occur, is more gradual. Nevertheless, storage of soil organic carbon is at risk of reversibility. Concerns about permanence can be separated into biogeochemical (climate impacts and biological feedbacks) and socioeconomic factors (changes in land ownership and practices), either of which could impact the integrity of the soil carbon sink. For example, increases in temperature due to climate change can stimulate soil respiration (the release of carbon dioxide from soils) (Luo et al., 2001; Hicks Pries et al., 2017), counteracting practices that aim to reduce soil respiration rates as a means to increase soil carbon stocks. Socioeconomic factors, such as changes in land ownership, could cause the abandonment of soil carbon sequestering management practices – for example, if a new landowner chooses to revert to annual crops or conventional tillage – resulting in losses of previously stored soil organic carbon.

2.3.4

Current challenges to deployment

Barriers to implementation include:

Lack of implementation support and education among farmers about new practices;

Limited demonstration projects;

Lack of policy and financial incentives to help de-risk practice changes that may require several years to fully take effect;

Reliably attributing the incremental (or additional) benefit of specific actions when efforts involve changes to existing land management practices;

Incompletely defined and demonstrated monitoring and verification methods and costs (Smith et al., 2020); and

Difficulty guaranteeing the long-term (e.g., 100-year time horizon) integrity of stored soil carbon (Smith, 2012).

As discussed in Section 1.5, another critical challenge for soil projects is additionality: evaluating the degree to which sequestration occurred because of some intervention above and beyond what would have happened in a no-intervention baseline scenario. As many efforts involving soil carbon sequestration involve changes to existing practices, accurately accounting for the potential CDR benefit requires comparison to a counterfactual scenario – what would have happened otherwise – that can only be estimated, not observed (Haya et al., 2020).

Dedicated pilot projects and demonstration programs could help identify the measures required to overcome these barriers, with an emphasis on learning by doing and resolving key uncertainties through data acquisition and optimization of methods (Paustian et al., 2019; Vermeulen et al., 2019). Since soils have been managed for millennia, there is a high level of knowledge and readiness, with the potential to contribute to other global sustainability goals such as improved water quality, ecosystem restoration, biodiversity preservation, job creation, and increased yields/food security (Smith et al., 2019).

2.3.5

Current and estimated costs

The economic costs of establishing and maintaining large-scale soil carbon sequestration projects are uncertain, given that market experience consists mainly of a few pilot projects and academic studies. Tan et al. (2016) reviewed a number of economic analyses and pilot projects and, in 20 of the 21 studies reviewed, costs were less than $50/tCO2eq. By combining an economic model with empirical estimates of soil carbon sequestration rates, Smith et al. (2008) estimated an economic potential of between 1.5 and 2.6 GtCO2eq per year at carbon prices between $20 and $100/tCO2eq (Smith, 2016; Smith et al., 2008). Marginal costs ranged from negative to positive. Smith (2016) estimated that global soil carbon sequestration at a rate of 2.6 GtCO2eq per year would save a net $7.7 billion: $16.9 billion in savings minus costs of $9.2 billion.
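The net figure attributed to Smith (2016) can be checked, and expressed per tonne, with a few lines of Python. The sketch assumes that the dollar amounts are annual totals corresponding to the 2.6 GtCO2eq per year rate, which the text implies but does not state explicitly; the variable names are ours.

```python
SAVINGS_BN_USD = 16.9   # gross savings, billion USD (from the text)
COSTS_BN_USD = 9.2      # gross costs, billion USD (from the text)
RATE_GT_PER_YR = 2.6    # sequestration rate, GtCO2eq/yr (from the text)

# Net saving in billion USD.
net_saving_bn = SAVINGS_BN_USD - COSTS_BN_USD

# Assumption: the dollar figures are per year, so dividing by the annual rate
# gives an average net saving per tonne sequestered.
net_saving_per_tonne = (net_saving_bn * 1e9) / (RATE_GT_PER_YR * 1e9)

print(f"net saving: ${net_saving_bn:.1f} billion")
print(f"average net saving: ${net_saving_per_tonne:.2f} per tCO2eq")
```

An average of this kind hides the spread noted above, where marginal costs range from negative to positive across practices and regions.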

Because costs vary widely across practices, geographies, and cropping systems, cost estimates typically assess total sequestration potential using marginal abatement cost curves from the literature bounded by a maximum average cost per tonne. Figure 2.6 shows Griscom et al.’s estimates for additional sequestration potential through soil carbon management practices such as improved grazing management and conservation agriculture in comparison to other land-based CDR approaches, bounded by average costs of $10 per tonne CO2eq (low cost) and $100 per tonne CO2eq (cost-effective) (Griscom et al., 2017; Bossio et al., 2020). This analysis also incorporates safeguards for fiber security, food security, and biodiversity conservation, which constrain the potential of sequestration approaches. While these estimates account for the costs of practice conversion and implementation, they do not account for the costs of other social, political, and educational programs or measurement and monitoring efforts that will likely be needed to scale these solutions (Schlesinger & Amundson, 2019). Additionally, much of the literature on the costs and potential of soil carbon approaches evaluates total mitigation potential (which includes avoided emissions) rather than additional sequestration exclusively. Using the Griscom et al. methodology, Bossio et al. estimate that CDR represents 60 percent (3.3 GtCO2eq/yr) of the total mitigation potential of soil carbon management (Bossio et al., 2020).

Currently, only a few soil carbon projects are included in the portfolios of voluntary emissions reduction or offset registries, such as VCS (Verra, 2020), and no soil carbon offsets are included in mandatory emission-reduction cap-and-trade programs (such as in California or the EU). Therefore, the per-tonne carbon value of soil sequestration remains low in the context of environmental markets. There has been some direct financing within voluntary emission-reduction markets: in 2016, for example, transactions totaled 13.1 MtCO2eq at $5.10/tCO2, or approximately $67 million. More than 95 percent of the emission reduction, however, was from forestry biomass, with soils playing a very minor role. In the U.S., the federal government subsidizes soil conservation practices through the USDA, mainly on the basis of reductions in soil erosion and improved water quality. Cost sharing and other subsidies nevertheless indirectly benefit carbon sequestration, and Chambers et al. (2016) estimated that these programs resulted in increased storage of between 13 and 43 Mt C on U.S. agricultural lands at a cost for the assistance programs of approximately $60 million per year (2005-2014).

2.6 →

Scale and cost comparison for land-based carbon dioxide removal approaches. Removal potential is estimated for low-cost (<$10 MgCO2e−1 yr−1), cost-effective assuming a global ambition to hold warming to <2 °C (<$100 MgCO2e−1 yr−1), and maximum deployment with safeguards. Modified from Griscom et al., 2017.

2.3.6

Example projects

As previously discussed, only a few projects are currently included in the portfolios of registries for voluntary crediting, and protocol systems thus far lack robust third-party verification and monitoring from financially disinterested parties. Evaluating the impact of pilot projects is challenging, given significant variability in outcomes, and will require careful sampling methods, baseline estimation, and interpretation that is guided, but not replaced, by models (Paustian et al., 2017; Campbell and Paustian, 2015). Some companies are also attempting to create financial incentives for soil carbon sequestration, but these efforts are at an early stage with uncertain futures, and thus far lack rigorous and transparent verification or validation.

2.4

Improved forest management, afforestation, and reforestation

2.4.1

Introduction

Terrestrial ecosystems remove around 30 percent of human CO2 emissions annually (~9.5 Gt CO2eq/yr over 2000 – 2007), and Earth’s forests accounted for the vast majority of this carbon uptake (~8.8 Gt CO2eq/yr over the same period) (Friedlingstein et al., 2014; Pan et al., 2011). Thus, forests may hold substantial potential for further carbon dioxide removal, particularly if actively managed with CDR in mind (Anderegg et al., 2020; Griscom et al., 2017b). We emphasize that preventing emissions by slowing or stopping deforestation (often referred to as “avoided conversion”) is another crucial climate change mitigation strategy, has generally much larger per-unit-of-land-area climate benefits than forest-based CDR approaches, and will have a much more rapid positive climate impact than forest-based CDR approaches. All forest-based CDR approaches take decades, and often more than 100 years, to have substantial radiative effects, whereas preventing deforestation starts reducing climate change immediately and maintains the co-benefits (e.g., biodiversity) of old-growth forests. Although discussed briefly in Section 3.2.1, avoided conversion is otherwise not a focus area for this primer.

Improved forest management (IFM) for CDR refers to active modification of forestry practices to promote greater forest biomass and carbon storage (Putz et al., 2008). Common IFM strategies include lengthening harvest schedules, thereby generally increasing the age and carbon storage of the forest on average; improved fire management; thinning and understory management; and improved tree plantation management (Griscom et al., 2017b; Griscom and Cortez, 2013; Putz et al., 2008). Afforestation refers to the establishment of new trees and forest cover (often monoculture plantations) in an area where forests have not existed recently, while reforestation refers to the replanting of trees on recently deforested land (Hamilton et al., 2010). Some ecologists question the feasibility or utility of large-scale afforestation (Lewis et al., 2019). The regeneration of a damaged or harvested forest is typically considered reforestation, but can also co-occur alongside other forms of improved forest management. Agroforestry practices entail the integration of trees into agricultural systems, in combination with crops, livestock, or both. Improved forest management, afforestation, reforestation, and agroforestry projects form part of several voluntary and mandatory carbon-offset trading schemes worldwide (Diaz et al., 2011; Miles et al., 2015).

From a carbon cycle perspective, reforestation and afforestation are forms of CDR insofar as new growth sequesters CO2 and accumulates carbon in the form of biomass. IFM projects are more complex because they not only include continued (or accelerated) sequestration from existing or new vegetation, but also claim to prevent (or decrease) emissions relative to what would otherwise have occurred (CarbonPlan, 2020).

2.4.2

Current status

Improved forest management, afforestation, and reforestation could play a role in a near-term CDR portfolio given that these approaches do not rely on any future technological developments (Griscom et al., 2017b; NASEM, 2019b). Forest-based CDR projects totaled an estimated 90 MtCO2eq per year in 2015 and 2016 (the most recent years for which global data are available) and are a major component of California’s cap-and-trade system, making up the majority of carbon offsets as of 2019 (Anderegg et al., 2020; State of Forest Carbon Finance, 2017). Hundreds of CDR forestry projects have been deployed globally since 2000 through both voluntary and compliance markets, according to an analysis of 14 major registries and emissions trading schemes (State of Forest Carbon Finance, 2017), although a global database of projects is not currently available.

2.4.3

Predicted scale and storage potential

Globally, the CDR potential for IFM, afforestation and reforestation has been estimated at between 4 and 12 GtCO2/yr (State of Forest Carbon Finance, 2017) and up to roughly 12.5 GtCO2/yr by 2030 at a carbon price of $100/tCO2eq (Griscom et al., 2017a). One recent study (Fuss et al., 2018) reported cumulative potentials, with estimates for the year 2100 ranging from 80 to 260 GtCO2, although such a high-end scenario would require vast areas of land and could conflict with other uses, such as agriculture. In the U.S., the potential increase in carbon uptake ranges from 0.7 to 6.4 tC/ha/yr over periods of 50 to 100 years, as illustrated in Figure 2.7. While the global potential of reforestation alone has recently been estimated as very high (Bastin et al., 2019), widespread criticism of that work revealed fundamental methodological flaws in forest area and carbon calculations and a lack of accounting for biophysical feedbacks that could cancel out climate benefits, indicating that such high estimates are likely not credible (Veldman et al., 2019; Lewis et al., 2019; Skidmore et al., 2019; Friedlingstein et al., 2019).
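The per-hectare uptake rates quoted above for the U.S. can be turned into cumulative storage per hectare with the sketch below. It assumes a constant rate over the whole period, which is a simplification since real stands grow quickly at first and then slow down, and the text does not say which rate goes with which time span. The function name and the combinations shown are illustrative only.

```python
C_TO_CO2 = 44.009 / 12.011   # mass ratio of CO2 to carbon


def cumulative_uptake_tco2_per_ha(rate_tc_per_ha_yr, years):
    """Cumulative CO2 uptake per hectare, assuming a constant annual rate."""
    return rate_tc_per_ha_yr * years * C_TO_CO2


RATES_TC = (0.7, 6.4)   # tC/ha/yr, range quoted in the text
PERIODS_YR = (50, 100)  # years, range quoted in the text

for rate in RATES_TC:
    for years in PERIODS_YR:
        total = cumulative_uptake_tco2_per_ha(rate, years)
        print(f"{rate} tC/ha/yr over {years} yr -> {total:.0f} tCO2/ha")
```

The spread across these combinations shows how strongly per-hectare outcomes depend on site productivity and time horizon, even before permanence risks are considered.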

2.7 →

Changes in soil carbon stock from afforestation – shortleaf pine example from U.S. data in Smith et al. (2006)

Changes in soil carbon stock from afforestation – shortleaf pine example from U.S. data in Smith et al., (2006)

As is also discussed in Section 3.3.3, carbon sequestered from IFM, afforestation, and reforestation practices may be disrupted by socioeconomic and environmental risk factors that reduce permanence. Three further elements of IFM, afforestation, and reforestation that differ from most other CDR approaches are:

Tree growth takes a long time, usually decades, to sequester large amounts of CO2 (Zomer et al., 2017);

In the best-case scenario, with rigorous monitoring and strong contractual agreements around land use, the maximum duration of durable storage is likely to be around 100 years, which is still orders of magnitude less than that offered by geological or mineral storage; and

Forest projects often have immense co-benefits beyond carbon storage, including benefits for biodiversity and conservation, ecosystem goods and services like water purification and pollination, and local and indigenous communities’ livelihoods (Anderegg et al., 2020).

2.4.4

Current challenges

Widespread deployment of IFM, afforestation and reforestation must respond to six important challenges: land competition, permanence risks, biophysical feedback to the climate, additionality, leakage, and ethically and socially responsible deployment. Many of these challenges are particularly acute for afforestation because it involves expanding forests into non-forest lands, which intensifies competition with other land uses, and such lands may not be climatically suitable for long-term forest stability, indicating much greater risks to the permanence of carbon storage. In organic soils, afforestation may also lead to soil carbon losses that cancel out carbon gains in biomass (Friggens et al., 2020). Many recent studies (Fargione et al., 2008; Griscom et al., 2017b, 2019) have explicitly avoided assessment and quantification of afforestation approaches, as these issues are particularly problematic and pose a challenge to implementation of forest-based CDR in mitigation strategies.

As with soils and as discussed in Section 1.4, attributing the incremental (or additional) benefit of specific actions, i.e., additionality, is another vexing problem for forest-based CDR, because so many efforts, including any IFM projects and some reforestation projects, involve changes to existing land management practices. Accounting for the potential CDR benefits of such changes requires comparison to a counterfactual scenario of no management change, which can only be estimated at regional scales, not directly observed. While afforestation may be more straightforward to demonstrate as new and additional, the feasibility and ecological suitability of this project category may be limited.
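The counterfactual comparison described above can be sketched as a simple difference. Because the baseline can only be estimated, any uncertainty in it carries directly into the credited removal. All numbers below are hypothetical and chosen only for illustration; the function name is likewise an assumption.

```python
# Additionality as observed removal minus an estimated counterfactual baseline.
# All figures are hypothetical; none come from the cited studies.

def additional_removal(project_tco2: float, baseline_tco2: float) -> float:
    """Removal attributable to the project: observed minus counterfactual."""
    return project_tco2 - baseline_tco2

project = 120_000.0                             # tCO2 stored under the project
baseline_low, baseline_high = 70_000.0, 100_000.0  # plausible baseline range

# A higher baseline estimate leaves less removal to credit, and vice versa.
low = additional_removal(project, baseline_high)
high = additional_removal(project, baseline_low)
print(f"Additional removal: {low:,.0f} to {high:,.0f} tCO2")
```

In this hypothetical case the same project could be credited with anywhere from 20,000 to 50,000 tCO2, depending only on which baseline estimate is accepted.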

Competition for land and water used for food, fuel, and other natural resource production is an important concern for forest-based CDR approaches. With IFM, land competition is rarely an issue, as the land is already used for forestry. Achieving large carbon dioxide removal rates and volumes with reforestation and afforestation would require very large tracts of land – approximately 27.5 million ha for 1 Gt of CO2 removed (Houghton et al., 2015; NASEM, 2019) – and potentially large volumes of water (Smith et al., 2016a; Smith and Torn, 2013; Trabucco et al., 2008). Land constraints could be reduced through agroforestry approaches with suitable crops like coffee and cacao; the challenges would be greater with modern staple crops like wheat, maize, soy, and rice. Many of the studies cited above used reasonable safeguards for food, textiles, and biodiversity, but competition for resources and the economic value of other land uses remain major constraints on the amount of land available for afforestation and reforestation (Lewis et al., 2019).
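To give a sense of scale, the land-intensity figure quoted above can be applied to different removal targets. This is a rough sketch that assumes the figure scales linearly, which ignores differences in site productivity, species, and climate; the constant and function names are illustrative.

```python
# Land needed for forest-based removal, scaled linearly from the figure
# quoted in the text (about 27.5 million ha per Gt of CO2 removed).
# Linear scaling is a simplifying assumption, not a claim from the sources.
HA_PER_GTCO2 = 27.5e6

def land_required_mha(removal_gtco2: float) -> float:
    """Land area in million hectares for a given removal in GtCO2."""
    return removal_gtco2 * HA_PER_GTCO2 / 1e6

def implied_storage_tco2_per_ha() -> float:
    """Average CO2 stored per hectare implied by the quoted figure."""
    return 1e9 / HA_PER_GTCO2

for target in (1, 4, 12):
    print(f"{target} GtCO2 -> about {land_required_mha(target):.0f} million ha")
print(f"Implied storage: about {implied_storage_tco2_per_ha():.1f} tCO2/ha")
```

Under this assumption, 4 Gt of CO2 would need about 110 million ha and 12 Gt about 330 million ha.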

Climate change poses significant hazards to forest stability and permanence, which could substantially undermine the effectiveness of forests in removing carbon (Anderegg et al., 2020). Ecological disturbances such as fire, hurricanes, droughts, and outbreaks of biotic agents (e.g., pests and pathogens) are a natural part of many ecosystems and should be factored into sequestration projections when disturbance regimes (probability and severity of disturbances) are constant over time (Pugh et al., 2019). Unfortunately, climate change is also greatly altering and increasing disturbances, particularly wildfire, drought, and biotic agents (Dai, 2013; Williams & Abatzoglou, 2016; Williams et al., 2020; Anderegg et al., 2020). Increasing climate-driven disturbances will decrease the carbon-storage potential of forests and can even drive forests to become a net carbon source to the atmosphere (Kurz et al., 2008). These increasing risks to permanence must be accounted for in policy and project design, and more research is needed to quantify, forecast, and assess risks and how to mitigate them (Kurz et al., 2008b).

Beyond simply storing carbon, forests have other major impacts on global water and energy cycles, termed “biophysical feedbacks,” which mediate their net effect on the climate. In particular, changes in albedo – the degree to which Earth’s surface reflects solar energy – are considered one of the most prominent issues from a climate perspective and have been overlooked in some recent, exaggerated estimates of afforestation and reforestation potential (IPCC, 2019). Indeed, several studies with Earth system models have shown that an expansion of forest in the tropics would result in cooling, while afforestation in the boreal zone might have only a limited effect or might even result in global warming (Kreidenweis et al., 2016; Laguë et al., 2019; Jones et al., 2013a; Jones et al., 2013b). There are also significant uncertainties about the impacts on non-carbon dioxide greenhouse gases, emissions of volatile organic compounds, evapotranspiration (the combination of evaporation from land and transpiration from plants), and other issues (Anderegg et al., 2020; Benanti et al., 2014; Bright et al., 2015; Kirschbaum et al., 2011; Zhao and Jackson, 2014) that can influence the net climate effects of forestry projects.

Finally, obstacles may arise regarding monitoring and sustaining sequestered carbon in the long term due to carbon sink saturation, changing practices among forest managers and farmers, and creation of market and policy contingencies.

At least some of these six challenges may be tackled and minimized with improved science and an appropriate policy and regulatory framework. As previously mentioned, it is crucial to remember that forests also have the potential to contribute substantially to other global sustainability goals, particularly if co-benefits, such as biodiversity and managing for diverse and native forests, are included along with societal goals in project design and policies.

2.4.5

Current costs and estimated costs

Maximum costs of afforestation and reforestation have been estimated at $100 per tonne of sequestered CO2, though there is less agreement on the lowest potential cost, with the National Academy of Sciences (NASEM, 2015) quoting $1 per tonne and others citing a range of $18 – $20 per tonne of CO2 (Fuss et al., 2018). Crucially, most estimates indicate that it will be more costly to restore forests than to preserve existing ones, emphasizing the critical role of reducing deforestation compared to planting new forests (Reid et al., 2019).

2.4.6

Example projects

As discussed above, projects and policies involving IFM, reforestation and afforestation are in development around the world. Forest projects, and in particular IFM projects, make up a large fraction of compliance with California’s cap-and-trade system (California Air Resources Board, 2020), though significant challenges and concerns have been raised around how these programs address additionality, leakage, and permanence risks (Anderegg et al., 2020). The United Nations’ Trillion Tree Campaign (“The Trillion Tree Campaign,” 2020) aims to support tree-planting efforts around the world and claims that 13.6 billion trees have already been planted under its auspices (Goymer, 2018). Other IFM, afforestation and reforestation projects are ongoing through the UN REDD+ program, the Bonn Challenge, and other mechanisms (State of Forest Carbon Finance, 2017; Angelsen et al., 2018; Roopsind et al., 2019), but these programs, too, have raised related concerns (West et al., 2020).

2.5

Coastal blue carbon

2.5.1

Introduction

Coastal blue carbon refers to land use and management practices that increase the organic carbon stored in living plants or soils in vegetated, tidally-influenced coastal ecosystems such as marshes, mangroves, seagrasses, and other wetlands. These approaches are sometimes called “blue carbon” or “blue carbon ecosystems” but refer to coastal ecosystems instead of the open ocean (Crooks et al., 2019). Restoration of high-carbon-density, anaerobic ecosystems, including “inland organic soils and wetlands on mineral soils, coastal wetlands including mangrove forests, tidal marshes and seagrass meadows and constructed wetlands for wastewater treatment” can provide another form of biological CDR (IPCC, 2014). It is increasingly critical to not only preserve existing wetlands, but also to restore and construct these ecosystems for CDR given other co-benefits, including coastal adaptation and other ecosystem services (Barbier et al., 2011; Vegh et al., 2019). Although these ecosystems are very efficient and have high productivity rates, large uncertainties persist in the fraction of organic material that must be buried (sunk to the sea floor so that its carbon is sequestered) to ensure reliable CDR for macroalgal systems such as kelp (NASEM, 2019).

2.5.2

Current status

Global wetlands have been reported to store at least 44 percent of the world’s terrestrial biological carbon, some of it in vegetation but most in deep stocks of soil organic carbon (Zedler and Kercher, 2005). These carbon stocks in peatlands and coastal wetlands are also vulnerable to reversal due to climate change and human activities (Parish et al., 2008). In fact, roughly one-third of global wetland ecosystems had been lost by 2009 (Hu et al., 2017), with coastal blue carbon ecosystems releasing on the order of 150 – 1050 MtCO2/yr globally due to drainage and excavation (Pendleton et al., 2012). These ecosystems also share significant carbon sequestration capacity under appropriate management and adaptation measures (Page and Hooijer, 2016). As more information about vegetation and soil organic carbon in wetlands has become available, this method has received more attention as a land mitigation option (IPCC, 2014).

2.5.3

Potential scale and storage potential

The total carbon flux per year, and the potential carbon impact of coastal blue carbon, is most influenced by the total area of coastal carbon ecosystems, the rate at which they bury organic carbon, and the capacity to implement approaches given potential barriers to managing, creating, and restoring areas for CDR (NASEM, 2019). Long-term sequestration rates in coastal wetlands are estimated at 1 – 8 tCO2/ha/yr (IPCC, 2014), a rate that significantly increases when emissions avoided from (previously degraded) restored wetlands are counted (Mitsch, 2012; Parish et al., 2008; Smith et al., 2008). The focus on coastal blue carbon reduces the potential for unintended and adverse emissions of non-carbon dioxide greenhouse gases (e.g., methane), as salinity greater than 18 psu (practical salinity units) has been shown to significantly reduce or inhibit methane production (Poffenbarger et al., 2011). However, determining where this salinity boundary lies can be challenging when scaling CDR. While freshwater wetlands also store significant amounts of carbon in above- and below-ground biomass, and in soil, they are estimated to be the source of 20 to 25 percent of global methane emissions (Mitsch et al., 2012). As a result, restoring some wetlands could induce a short-term net warming effect (Mitsch et al., 2012) due to increased emissions of methane and nitrous oxide, whereas restoring tidally-restricted wetlands would significantly decrease methane emissions (Kroeger et al., 2017).
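Since annual removal depends on ecosystem area multiplied by burial rate, the quoted rate range translates directly into a range of annual removal for any given area. The sketch below uses a hypothetical restored area of one million hectares and ignores avoided emissions and methane effects; the names are illustrative.

```python
# Annual removal for a coastal wetland area, using the long-term
# sequestration range quoted in the text (1 to 8 tCO2/ha/yr).
# The example area is hypothetical; avoided emissions and non-CO2
# gases are deliberately left out of this simple estimate.
RATE_LOW = 1.0   # tCO2/ha/yr
RATE_HIGH = 8.0  # tCO2/ha/yr

def annual_removal_range_mt(area_ha: float) -> tuple[float, float]:
    """Return (low, high) annual removal in MtCO2/yr for an area in hectares."""
    return area_ha * RATE_LOW / 1e6, area_ha * RATE_HIGH / 1e6

low, high = annual_removal_range_mt(1_000_000)
print(f"1 million ha: {low:.0f} to {high:.0f} MtCO2/yr")
```

The eightfold spread between the low and high ends shows how strongly the result depends on the site-specific burial rate.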

At the national (U.S.) scale, NASEM (2019) highlighted several coastal blue carbon approaches for tidal wetland and seagrass ecosystem management that could contribute to net carbon dioxide removal and reliable sequestration of 5.4 GtCO2 by 2100. These included restoration of former wetlands, use and creation of nature-based features in coastal resilience projects, managing the natural development of new wetlands as sea levels rise (migration), augmentation of engineered projects with carbon-rich materials, and management to prevent potential future losses and enhance gains in carbon capacity. At a global scale, it has been estimated that avoided coastal wetland and peatland impacts combined with coastal wetland and peat restoration could deliver 2.4 – 4.5 Gt/yr by 2030 (Griscom et al., 2017b). There is also the added potential to contribute to other global sustainability goals, such as improved water quality, ecosystem restoration, biodiversity preservation, job creation, and climate resilience (Barbier et al., 2011).

2.5.4

Current challenges

The reliability of coastal blue carbon as a long-term CDR approach depends on how changing ecosystem drivers affect the rate and future of CO2 removal. Ecosystem drivers include relative sea-level rise, temperature, light availability and watershed management, and coastal development activities that affect sediment availability, salinity, nutrient inputs, and available area for wetlands to migrate inland as sea level rises (NASEM, 2019). While integrated approaches that couple experiments, hierarchical approaches to scaling, and field-validated remote sensing have greatly improved our understanding of organic carbon accumulation and landscape-scale estimation of CO2 removal, research gaps persist. For example, the fate of organic carbon eroded from coastal wetlands and the effect of warming on plant production and decomposition for different types of coastal blue carbon ecosystems remain challenges to our understanding of future CDR capacity. Lands that could support the inland migration of wetlands but could also be used for other purposes (e.g., agriculture, ports, industrial sites, and other high-value capital assets) may pose social and economic barriers and limit the extent to which these lands can be used for CDR (NASEM, 2019). Researchers are exploring other coastal blue carbon CDR approaches, such as expanding coastal wetland areas by beneficial use of carbon-rich materials, but these are in either the research or small-scale demonstration phase.